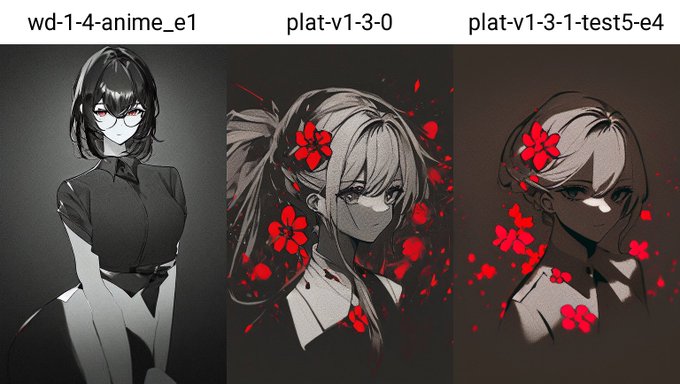

Twitter illustration search results for "dataset". Page 2 of 240 results.

@noop_noob test5 has slightly more training images than 1.3.0, and the output is close to 1.3.0, but degraded.

In 1.3.0 I cropped the images to 512x512; in test5 I skipped that step and used aspect ratio bucketing instead.

Maybe the same procedure with the exact same dataset would reproduce 1.3.0
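For readers unfamiliar with the term: aspect ratio bucketing assigns each training image to one of a few fixed resolutions with a similar aspect ratio, rather than cropping everything to a square. Below is a minimal sketch of the idea only, not the procedure used for test5 or 1.3.0. The bucket list and the function names `nearest_bucket` and `group_by_bucket` are hypothetical, and real trainers typically also resize and lightly crop images to fit the chosen bucket.

```python
from collections import defaultdict

# Hypothetical bucket resolutions (width, height), each with roughly
# the same pixel count as 512x512. Real trainers choose their own list.
BUCKETS = [(512, 512), (576, 448), (448, 576), (640, 384), (384, 640)]


def nearest_bucket(width, height, buckets=BUCKETS):
    """Return the bucket whose aspect ratio is closest to the image's."""
    ratio = width / height
    return min(buckets, key=lambda b: abs(b[0] / b[1] - ratio))


def group_by_bucket(sizes, buckets=BUCKETS):
    """Map each bucket to the indices of the images assigned to it."""
    groups = defaultdict(list)
    for index, (width, height) in enumerate(sizes):
        groups[nearest_bucket(width, height, buckets)].append(index)
    return dict(groups)


if __name__ == "__main__":
    # Example image sizes (made up for illustration).
    sizes = [(1024, 1024), (1920, 1080), (600, 800)]
    for bucket, indices in group_by_bucket(sizes).items():
        print(bucket, indices)
```

Batches are then drawn from a single bucket at a time so that every image in a batch has the same shape. A fixed 512x512 crop, by contrast, discards whatever falls outside the square.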

@noop_noob Yes...

I am currently testing a WD1.4-based model trained on datasets with different contents to work out why v1.3.0's outputs are so nice.

Even if I increase the number of training images, I cannot exceed the miraculously created 1.3.0. I cannot reproduce it... 😭

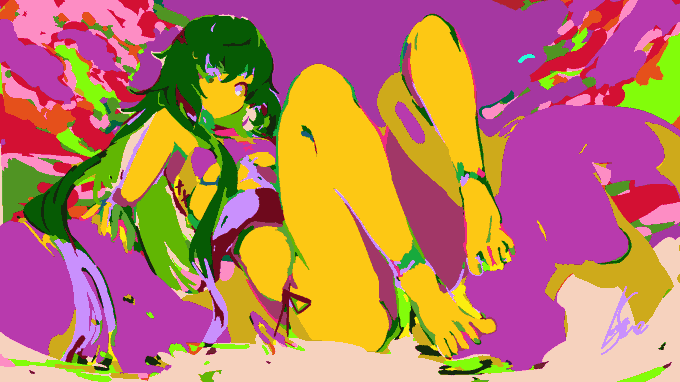

After weeks of trial and error finetuning my style with various techniques, trying dozens of datasets and prompts, hundreds of settings, and generating thousands of samples, I'm pleased with the results!

#stablediffusion

Happy New Year one and all...and we are off to a roaring start for 2023. Globally, at +0.7°C above the 1979-2000 baseline, this is the highest anomaly I have witnessed for this dataset. @MichaelEMann @KHayhoe @ClimateOfGavin @DrJeffMasters @ProfStrachan @BrianMcHugh2011 @ZLabe

We're surprised by how well it performs, and interestingly - the order in which the training data was organised had a significant impact on the final model.

Another training run on the same dataset in a different order didn't garner anywhere near the same consistency of results.

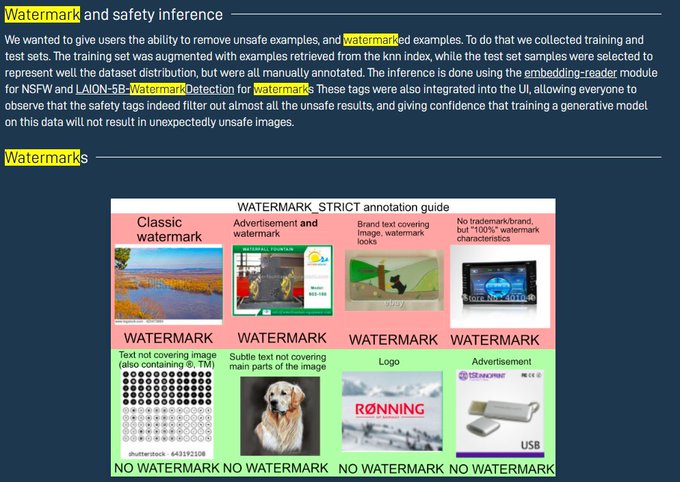

I learned something interesting about A.I. art. The datasets used in Midjourney and Stable Diffusion were created by LAION, a non-profit ai research organization. (1/7)

Let's try a prompt that's nowhere close to the dataset, how about "a zombie". 🧟‍♂️

This is interesting - the lower strength training (left) gives you a different feel with the pixel art and can deviate further stylistically, while the stronger runs (right) look more polished.

Very soon AI art generators won't be inspired by any art (already less than 2% of the dataset) + it will still generate art with ease + the whole "stolen remixer" narrative will collapse.

Also AI will have no "tells" like crummy hands + it'll be perfectly coherent.

Then what?

From my datasets

Flowers will grow

And this is AGI

And this is singularity

@somewhere_art @foundation A few from my latest collection. Trained from a dataset including my handmade wool felt artworks, paintings and nature photography.

@thatanimeweirdo @ifandbut01 @SarahCAndersen @Kickstarter I was pretty shocked that when I put in the name of one of my favourite singers for a music video MidJourney actually generated some accurate pictures of her. I thought this would for sure be blocked or like, not be allowed to include her face in the dataset

GM!

Still working on captioning this dataset. Hope your day is pleasant and productive!

#NFTart #AIart #omen #AIIA #psychedelicart

Yes, Twitter, I too agree that the inclusion of art in an AI dataset and claiming it doesn't exist because it's 'data' is pretty fucking ridiculous and deserves a warning, but not like this.

@somewhere_art @foundation Some recent artworks trained from my wool textile artworks datasets.

@cadmiumcoffee It would be a shame if this effect were accelerated by the deliberate feeding of image/text errors into these datasets. Potato hands landscape concept ArtStation realistic big boob.

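Today I sat in on the accepted-paper sessions on character animation and image generation, and dug through related papers and repositories as well. The real find was this one:

"The DanbooRegion 2020 Dataset"

https://t.co/KFyFYb4fpr

Would it make sense if I described it as Danbooru with annotated regions? Evaluated by 12 people.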

@USClaireForce Is this Mofo serious? I already found several of my older artworks in LAION-5B dataset and I haven't even scratched the majority of it. This lying s+++ of sh+t

Exact same prompt. *Almost* the same training dataset.

To the right, I added a few more pictures to add a bit of variability. The results are much better! (details, colors).

Prediction: "dataset engineering" will be more critical than "prompt engineering".

#AI #CrackingTheCode

We've just published a whole load of Land Cover Map 1km summaries for both GB and Northern Ireland.

The datasets are now available for 2017, 2018, 2019, 2020 and 2021

https://t.co/XQEAnVY6MG