cubic eased multi-cond guidance

similar visual effect to latent walk, but I think it has better temporal coherence + the motion's easier to follow.

we're mixing denoising predictions, so it's a visual interpolation rather than a conceptual interpolation.
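a minimal sketch of the idea: ease a mix weight with a cubic curve, then blend two denoising predictions with it. the exact easing curve isn't stated above, so the standard cubic ease-in-out is an assumption here, and `cubic_ease_in_out` / `mix_predictions` are hypothetical names. flat lists of floats stand in for the Unet's output tensors.

```python
def cubic_ease_in_out(t: float) -> float:
    """Standard cubic ease-in-out on [0, 1] (assumed curve, not confirmed by the source)."""
    if t < 0.5:
        return 4 * t ** 3
    return 1 - (-2 * t + 2) ** 3 / 2


def mix_predictions(pred_a, pred_b, t):
    """Blend two denoising predictions; t in [0, 1] is the position in the animation."""
    w = cubic_ease_in_out(t)
    return [(1 - w) * a + w * b for a, b in zip(pred_a, pred_b)]


if __name__ == "__main__":
    # Sweep t across the animation: the weight changes slowly near both ends.
    for frame in range(5):
        t = frame / 4
        print(t, mix_predictions([0.0, 0.0], [1.0, 2.0], t))
```

the blend happens on the predictions for each condition, not on the text embeddings, which is why the result is a visual interpolation.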

latent walk (spherical linear interpolation between word embeddings on CLIP's latent hypersphere) vs multi-cond guidance

the effect is similar, but pose changes more during latent walk

#stablediffusion
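for reference, a minimal slerp sketch of the kind a latent walk uses. plain lists stand in for embedding vectors, `slerp` is a hypothetical helper, and the fallback to linear interpolation for near-parallel (or near-opposite) vectors is my own choice rather than anything described above.

```python
import math


def slerp(v0, v1, t):
    """Spherical linear interpolation between two vectors, t in [0, 1]."""
    dot = sum(a * b for a, b in zip(v0, v1))
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    # Clamp to guard acos against rounding just outside [-1, 1].
    cos_omega = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    omega = math.acos(cos_omega)
    sin_omega = math.sin(omega)
    if abs(sin_omega) < 1e-6:
        # Degenerate angle: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]
```

here the interpolation happens on the conditioning itself, before the Unet ever sees it, whereas multi-cond guidance runs the Unet on each condition and mixes the outputs.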

multi-cond guidance is back!

thanks to @cafeai_labs for the hardware.

diffusers-play can now do complex batching.

each sample can have different conditions, a different number of conditions, its own negative prompt, its own CFG scale, and per-condition weights (weighted multi-cond)

https://t.co/r85Jc9EpXR

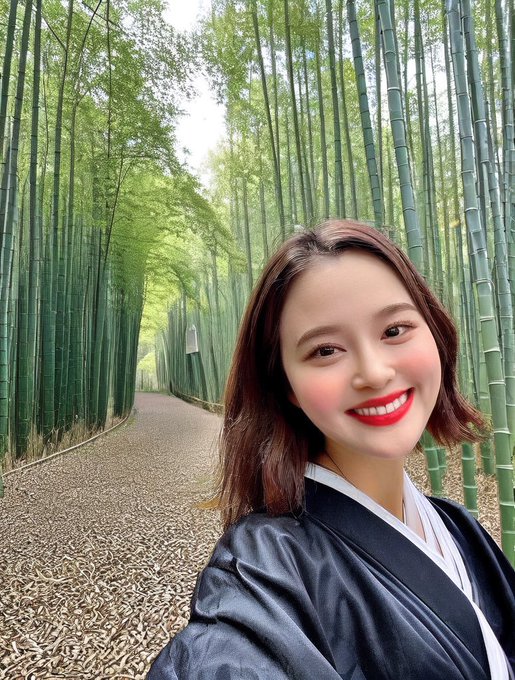
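a rough sketch of what per-sample batching like this has to compute. this is not diffusers-play's actual API: `guided_prediction` and `guide_batch` are hypothetical names, flat lists stand in for Unet outputs, and the formula is the usual classifier-free guidance extended to a weighted sum over conditions.

```python
def guided_prediction(uncond, conds, weights, cfg_scale):
    """Weighted multi-cond CFG: uncond + scale * sum_i w_i * (cond_i - uncond)."""
    out = list(uncond)
    for cond, weight in zip(conds, weights):
        for i, (c, u) in enumerate(zip(cond, uncond)):
            out[i] += cfg_scale * weight * (c - u)
    return out


def guide_batch(samples):
    """Each sample carries its own negative prediction, conditions, weights and scale."""
    return [
        guided_prediction(s["uncond"], s["conds"], s["weights"], s["cfg_scale"])
        for s in samples
    ]


if __name__ == "__main__":
    batch = [
        # Two equally weighted conditions at scale 2.0.
        {"uncond": [0.0, 0.0], "conds": [[1.0, 0.0], [0.0, 1.0]],
         "weights": [0.5, 0.5], "cfg_scale": 2.0},
        # A single condition at scale 7.5.
        {"uncond": [1.0, 1.0], "conds": [[2.0, 3.0]],
         "weights": [1.0], "cfg_scale": 7.5},
    ]
    print(guide_batch(batch))
```

the awkward part in practice is that samples contribute different numbers of conditions, so the Unet batch is ragged per sample; this sketch sidesteps that by looping in Python.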

waifu-diffusion 1.5 beta is out!

trained on tag-based prompts *and* natural language

photos *and* illustrations

high-res (896x896), varied aspect ratio!

great work by @s_alt_acc @cafeai_labs @haruu1367 as usual, and any other contributors

https://t.co/5D3ZCmGSoU

finally got the Nvidia 4090 PC set up!

Python 3.11 + CUDA 11.8 + PyTorch 2.0 alpha + xformers + Ubuntu 22.10

it's so faaaassst

thanks again @cafeai_labs for sponsoring the build!

https://t.co/xzpWuYHLgl

#stablediffusion on Mac got 12% faster! (12 Unet steps 12.03->10.76s; avg of 10)

upgraded pytorch 789b143...acab0ed (Dec 23–Jan 6)

wonder if it's due to razarmehr's optimizations to the linear op (made BERT 3x faster)

https://t.co/r5HBnpm5RW

more to come when macOS updates!
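quick check of the Mac speedup arithmetic, using only the two timings quoted above (12 Unet steps, 12.03s before and 10.76s after). "12% faster" matches the throughput gain; the wall-clock time itself dropped by a bit less.

```python
before_s = 12.03  # 12 Unet steps, before the PyTorch upgrade
after_s = 10.76   # 12 Unet steps, after the upgrade
steps = 12

time_saved = (before_s - after_s) / before_s  # fraction of wall-clock time removed
speedup = before_s / after_s - 1              # fractional increase in steps per second

print(f"time saved: {time_saved:.1%}")
print(f"throughput gain: {speedup:.1%}")
print(f"seconds per step: {before_s / steps:.3f} -> {after_s / steps:.3f}")
```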

dropping out the final value head from #stablediffusion's cross-attention, and replacing it with a duplicate of the penultimate value head

different, but… fine?

https://t.co/3yrsOmw23Z
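one way the head swap could be done, as a sketch: overwrite the last head's slice of the value projection with a copy of the penultimate head's slice. this assumes the projection's output rows are laid out head by head (contiguous blocks of `head_dim` rows), which is a layout assumption and not something stated above. `duplicate_penultimate_value_head` is a hypothetical helper, and nested lists stand in for the weight tensor.

```python
def duplicate_penultimate_value_head(v_weight, heads):
    """Return a copy of v_weight whose final head is a duplicate of the penultimate one.

    v_weight: list of rows, shape [heads * head_dim][in_dim], rows grouped by head.
    """
    if heads < 2:
        raise ValueError("need at least two heads")
    head_dim = len(v_weight) // heads
    patched = [row[:] for row in v_weight]  # copy so the original stays intact
    start = (heads - 2) * head_dim
    penultimate = patched[start:start + head_dim]
    patched[(heads - 1) * head_dim:] = [row[:] for row in penultimate]
    return patched


if __name__ == "__main__":
    # 3 heads, head_dim 2, in_dim 1: the last two rows become copies of rows 2-3.
    weight = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
    print(duplicate_penultimate_value_head(weight, heads=3))
```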

I'm not actually sure #stablediffusion is *using* all of those attention heads.

we can throw away one of the value heads used by cross-attention, and get an almost-correct result.

"One Write-Head is All You Need" suggests only _query_ needs multiple heads.

https://t.co/axPGdLmlJT
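for context, a tiny sketch of the multi-query attention idea from that paper: each head has its own queries, but all heads share a single set of keys and values. pure Python lists stand in for tensors, and `multi_query_attention` is a hypothetical name, not code from the paper or from Stable Diffusion.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def multi_query_attention(queries_per_head, keys, values):
    """queries_per_head: [heads][q_len][dim]; keys: [kv_len][dim]; values: [kv_len][v_dim].

    Every head attends over the same keys and values; only the queries differ.
    """
    scale = 1 / math.sqrt(len(keys[0]))
    v_dim = len(values[0])
    outputs = []
    for head_queries in queries_per_head:
        head_out = []
        for q in head_queries:
            scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
            probs = softmax(scores)
            head_out.append(
                [sum(p * v[d] for p, v in zip(probs, values)) for d in range(v_dim)]
            )
        outputs.append(head_out)
    return outputs
```

the experiment above is a milder version of this: the heads keep separate values, except one head's values are replaced by a neighbour's.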

@haruu1367 @cafeai_labs it's trained on images with an area of up to 640x640 pixels, with neither side longer than 768.

I did the maths wrong and generated an out-of-distribution image (800x768), but it came out great.
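the maths, as a sketch: reading the limits as "width x height at most 640x640 pixels, and neither side over 768". the names are hypothetical, and snapping sizes to multiples of 64 is my assumption (a common bucketing step), not something stated above.

```python
MAX_AREA = 640 * 640  # 409,600 pixels
MAX_SIDE = 768


def in_training_distribution(width: int, height: int) -> bool:
    """True if the size fits both the area limit and the per-side limit."""
    return width * height <= MAX_AREA and max(width, height) <= MAX_SIDE


def max_height_for(width: int, multiple: int = 64) -> int:
    """Largest height (snapped down to `multiple`) that stays within both limits."""
    limit = min(MAX_SIDE, MAX_AREA // width)
    return limit // multiple * multiple


if __name__ == "__main__":
    print(in_training_distribution(800, 768))  # over on both area and side length
    print(in_training_distribution(640, 640))
    print(max_height_for(768))
```

800x768 is 614,400 pixels, about 1.5x the trained area, on top of the 800 side exceeding 768.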

waifu-diffusion 1.4 epoch 1 is out!

supports non-square aspect ratios, triple prompt length.

includes text encoder and VAE.

thanks to the WD team (@haruu1367, @cafeai_labs , salt, +contributors & sponsors) and to NovelAI for sharing their training process.

https://t.co/t8vVqxnTkK