@GeorgeCrudo @TrevyLimited @CreativeHeart25 Maybe you're not the type to figure it out. Maybe you would if you tried longer. It's possible to get cool & distinct stuff out:

https://t.co/xidoPFn52M

Prompt-To-Prompt editing allows you to easily change your input text without needing to completely regenerate the image. This makes it much easier to control the diffusion!

Example from bloc97's GitHub, four seasons of the same scene:

Threads that discuss bias as "quite distressing", knowing the source, are no better than insidiously crafted messages that manipulate public perception.

It covers up the most important ethical angle (people's livelihood) and frames the problem in a way to divide and conquer.

With #NeuralStyle, you can just take a low-resolution image as seed for the next stage, and increase the resolution in a coarse-to-fine manner.
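A minimal sketch of that coarse-to-fine loop in plain Python. The `refine` callback stands in for whatever optimization runs at each stage (style transfer iterations, for example). It and every other name here are hypothetical, and nearest-neighbor upscaling is just the simplest choice for illustration:

```python
def upscale_2x(image):
    """Nearest-neighbor 2x upscale of a 2D grid (a list of rows)."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(2)]  # duplicate each pixel horizontally
        out.append(wide)
        out.append(list(wide))  # duplicate each row vertically
    return out


def coarse_to_fine(seed, stages, refine):
    """Use each stage's result as the seed for the next, doubling the size each time."""
    image = refine(seed)  # optimize at the lowest resolution first
    for _ in range(stages):
        image = refine(upscale_2x(image))
    return image


# Placeholder refinement step (assumption): a real one would run the optimizer.
def identity(image):
    return image


result = coarse_to_fine([[0.1, 0.9], [0.5, 0.3]], stages=2, refine=identity)
print(len(result), len(result[0]))
```

Each stage only has to add detail to a layout that already exists, which is why the low-resolution seed matters so much.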

With CLIP+VQGAN, it doesn't really work. Everything great about the original is lost after 50 iterations:

Can't quite get to 720p yet, but 540p works... However, the appeal of the low-resolution images above is gone.

It's likely because the convnet's receptive field stays the same size in pixels, so it covers a smaller share of the image as you increase image size. Same for style transfer.
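To put rough numbers on that, here's a quick back-of-the-envelope calculation. The 200-pixel receptive field is an assumed figure for illustration only, not measured from any particular model, and `coverage` is a hypothetical helper:

```python
def coverage(receptive_field_px, image_height_px):
    """Fraction of the image height one unit of the convnet can see, capped at 1."""
    return min(1.0, receptive_field_px / image_height_px)


RECEPTIVE_FIELD = 200  # assumed, for illustration only; not taken from a real model

for height in (180, 540, 720):
    share = coverage(RECEPTIVE_FIELD, height)
    print(f"{height}p: {share:.0%} of image height")
```

Under that assumption, a unit that sees the whole frame at low resolution only sees a fraction of it at 540p or 720p, so global composition is harder to keep coherent.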

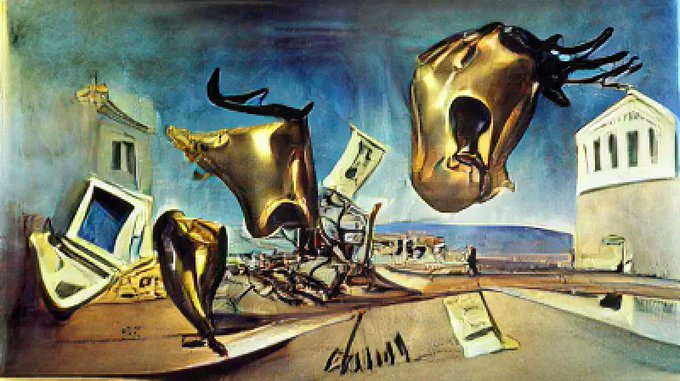

I wanted a picture that's a bit more reflective of the stock market, so I changed the prompt:

"stock market crashes financial crash stocks down chart red painting by salvador dali"

Turned out great! ✨

Next, I tried a longer prompt to suggest the style I wanted:

"stock market crashes painting by salvador dali"

Again, you see recurring themes with the same prompt, but different layouts each time.

I enjoyed working on that art style. It was a nice break from programming or other technical work!

I also found some earlier prototypes:

This week I stumbled on an old slide deck from https://t.co/gsWh0XI4wH's startup phase. This one is likely from 2018...

They were stressful times, but slides like this always made me smile!