Stable Diffusion 2.1 (base) is also supported by default in Doohickey (and diffusers in general!)

set the model name to "stabilityai/stable-diffusion-2-1-base" and go ham https://t.co/iKHHzpPG0s
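for anyone going through plain diffusers instead of Doohickey, here's a minimal sketch of what "set the model name" amounts to. The helper names (`load_pipeline`, `generate`), the step count, and the guidance scale are illustrative assumptions, not Doohickey's actual settings:

```python
MODEL_NAME = "stabilityai/stable-diffusion-2-1-base"


def load_pipeline(model_name: str = MODEL_NAME, device: str = "cuda"):
    """Load a Stable Diffusion pipeline with plain diffusers (not Doohickey's loader)."""
    # Imported lazily so this file can be imported without the GPU stack installed.
    import torch
    from diffusers import StableDiffusionPipeline

    # Half precision only makes sense on GPU; fall back to float32 elsewhere.
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(model_name, torch_dtype=dtype)
    return pipe.to(device)


def generate(pipe, prompt: str, steps: int = 30, guidance_scale: float = 7.5):
    """Run one text-to-image call and return the first image."""
    # steps and guidance_scale are assumed defaults, not values from the thread.
    result = pipe(prompt, num_inference_steps=steps, guidance_scale=guidance_scale)
    return result.images[0]
```

usage would look like `generate(load_pipeline(), "a lighthouse at dusk").save("out.png")`; the first call downloads the weights from the Hugging Face Hub.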

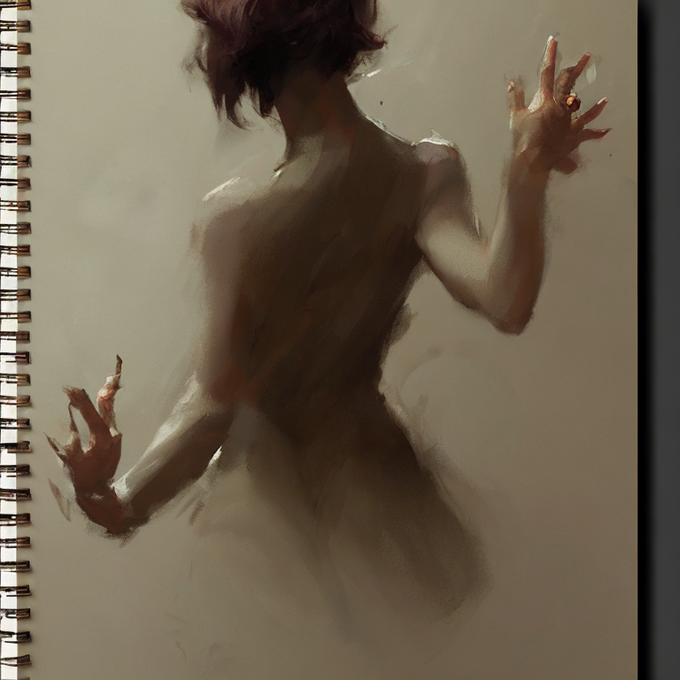

the prompts extended by my RAT (https://t.co/PvkSlrDL48) do translate okay to the v768 model! automatic prompt engineering!!!

(also using @minimaxir's <wrong> token in the negative prompt with "in the style of <wrong>, amateur, poorly drawn, ugly, flat, boring")
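a rough sketch of wiring that negative prompt into a diffusers pipeline. The `<wrong>` token only means something once its textual inversion embedding is loaded, so `embedding_source` is a hypothetical placeholder for wherever that embedding lives (a local file or a Hub repo id), and the helper names are made up for illustration:

```python
NEGATIVE_PROMPT = "in the style of <wrong>, amateur, poorly drawn, ugly, flat, boring"


def build_call_kwargs(prompt: str, negative_prompt: str = NEGATIVE_PROMPT) -> dict:
    """Bundle the prompt with the negative prompt quoted in the thread."""
    return {"prompt": prompt, "negative_prompt": negative_prompt}


def generate_with_wrong_token(pipe, prompt: str, embedding_source: str):
    """Load the <wrong> embedding, then generate with it in the negative prompt.

    `pipe` is assumed to be a diffusers StableDiffusionPipeline.
    `embedding_source` is a placeholder: point it at the embedding you actually use.
    """
    # Registers the learned embedding under the literal token "<wrong>".
    pipe.load_textual_inversion(embedding_source, token="<wrong>")
    return pipe(**build_call_kwargs(prompt)).images[0]
```

without the embedding loaded, `<wrong>` is just tokenized as ordinary text, so the loading step is the part that matters.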

colab demo below

I'm fine-tuning a prompt-extending GPT with RLHF for SD2.0 (by myself, I don't have a userbase to get ratings from)

Custom personal prompt engineer!

(a ton of the code was generated by ChatGPT too! here are some intermediate, unconditionally generated prompts)

[BREAKING NEWS] New diffusion model coming to Doohickey/HuggingFace soon™ (probably sooner than "soon™" you've heard elsewhere) https://t.co/glZ9tFAY4s

working on a model that handles higher resolutions better-ish; it's not perfect and it's not done training

(from lexica vs from this model, both 1024x1024)

w/ textual inversion for a DALL-E paint style; it doesn't work 100% but does add flavor

removing the last layer of CLIP for waifu diffusion like in NAI (NovelAI). I'm thinking of fine-tuning wd on the second-to-last layer for just a few thousand to a few tens of thousands of images

(removed vs not, "miku, masterpiece, best quality, miku")
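"removing the last layer" can be sketched like this with a Hugging Face `CLIPTextModel`: ask for all hidden states and take the second-to-last one instead of the final output. Re-applying the final layer norm is an assumption borrowed from common "clip skip" implementations, not something the thread confirms, and the function name is made up:

```python
def penultimate_clip_embeddings(text_encoder, input_ids):
    """Return text embeddings from the second-to-last CLIP transformer layer.

    `text_encoder` is assumed to be a transformers CLIPTextModel and
    `input_ids` the token ids produced by the matching CLIPTokenizer.
    """
    outputs = text_encoder(input_ids, output_hidden_states=True)
    # hidden_states[-1] is the last layer's output, so [-2] skips that layer.
    hidden = outputs.hidden_states[-2]
    # Assumption: normalize with the encoder's own final layer norm so the
    # embeddings stay in the range the UNet was trained on.
    return text_encoder.text_model.final_layer_norm(hidden)
```

recent diffusers versions accept precomputed embeddings through the pipeline's `prompt_embeds` argument, which is one way to feed these in without patching the pipeline.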

so i don't have to prompt engineer for profile pictures: an anime-style icon generator using stable diffusion

"1girl red hair, twintails, neutral expression, angry, short eyebrows"

https://t.co/FPppwQfeCt

i didn't realize the settings i was using were weird without perlin noise (without / with)

raw ViT-H-14 guided Stable Diffusion at [640, 512] (just pushed this update properly!) https://t.co/d0q3nMptm2