Here's what a separate face model is for. Say we created an awesome render in @Somnai_dreams #discodiffusion

But the face is anything but good. We take it as init, generate a few faces, pick one, paste it back, and voilà - the perfect image is ready!
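For the curious, the crop / regenerate / paste-back loop can be sketched roughly like this. It's a toy version on a grayscale image stored as nested lists, and it assumes the face is handled as a rectangular crop with a feathered edge so the seam doesn't show. The names `crop`, `paste_back`, and `feather`, plus the stand-in "new face", are made up for illustration; the actual face-model run isn't shown here.

```python
def crop(img, box):
    """Return the region box=(left, top, right, bottom) of a 2D grayscale image."""
    left, top, right, bottom = box
    return [row[left:right] for row in img[top:bottom]]


def feather_weight(x, y, w, h, feather):
    """Blend weight: 1.0 in the patch interior, ramping down toward the border."""
    if feather <= 0:
        return 1.0
    d = min(x, y, w - 1 - x, h - 1 - y)  # distance to the nearest patch edge
    return min(1.0, (d + 1) / (feather + 1))


def paste_back(img, patch, box, feather=2):
    """Blend patch into a copy of img at box; the input image is not modified."""
    left, top, right, bottom = box
    w, h = right - left, bottom - top
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            a = feather_weight(x, y, w, h, feather)
            old = img[top + y][left + x]
            out[top + y][left + x] = a * patch[y][x] + (1 - a) * old
    return out


# Toy usage: an 8x8 "render" with a 4x4 face region.
render = [[0.0] * 8 for _ in range(8)]
box = (2, 2, 6, 6)
face = crop(render, box)  # this crop would be the init for the face model
# Hypothetical stand-in for the face the model generated and we picked:
new_face = [[100.0 for _ in row] for row in face]
result = paste_back(render, new_face, box, feather=1)
```

A wider `feather` gives a softer seam but lets more of the old face bleed through at the edges, so it's a knob to tune per image.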

@KevinWPage Aaand another one. This time in Bryullov and Aivazovsky style. Wondering if it's worth minting

#aiart

We can still use 3D transforms from @gandamu_ml's #discodiffusion without the diffusion itself

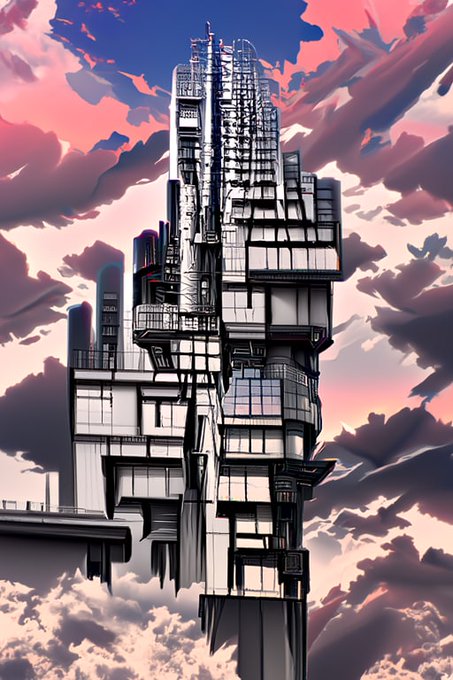

The scaffolding around a derelict hulk, being stripped of its otherworldly possessions. Aivazovsky style.

Testing brute-force x2 superresolution (extra pass with the same model)

Here's the before, and the after is at the link below.

#discodiffusion #nft

https://t.co/m1ssIgLY8E

Experimenting with upscaling #discodiffusion (by @zippy731 @Somnai_dreams @gandamu_ml) results via an extra diffusion pass, Real-ESRGAN, and a simple resize.

I think we can get away with just an extra pass using the same tuned diffusion model, but without CLIP guidance.
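Roughly, the "resize, then extra pass" idea looks like the sketch below. It's a toy version on nested lists, and it assumes the extra pass runs on an image that was first resized x2. The `refine` argument is a hypothetical callable standing in for the diffusion pass (same model, no CLIP guidance); the real wiring isn't shown here.

```python
def resize_x2(img):
    """Nearest-neighbour x2 resize of a 2D image stored as nested lists."""
    out = []
    for row in img:
        wide = [p for p in row for _ in range(2)]  # double every pixel
        out.append(wide)
        out.append(wide[:])  # double every row (as an independent copy)
    return out


def upscale_x2(img, refine=None):
    """Simple resize, optionally followed by a refinement pass.

    refine is a hypothetical stand-in for the extra diffusion pass:
    it takes the resized image and returns an image of the same size.
    """
    big = resize_x2(img)
    return refine(big) if refine is not None else big


# Toy usage: plain resize vs. resize plus a do-nothing "refiner".
small = [[1, 2], [3, 4]]
plain = upscale_x2(small)
refined = upscale_x2(small, refine=lambda im: [[p * 1.0 for p in row] for row in im])
```

With `refine=None` this is just the simple-resize baseline, which makes it easy to compare the variants side by side.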