Twitter illustration search results for "dataset". Page 3 of 241 results

So DeviantArt is making their own AI graphics tool, DreamUp, that uses art on DeviantArt. From the image settings you can ask for your art to not be included in the dataset. (Tho DA is not responsible even if a 3rd party still uses it.)

@Ted_Underwood Yep super impressive but another example here (3 out of 4!).

I guess bias always exists in the datasets but this is quite obvious.

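Tried out the #NarutoDiffusion model lol

Even Miku goes NARUTO 🥷

Fine-tuned #StableDiffusion on the "Captioned Naruto dataset", which was made by captioning narutopedia images with BLIP

Trained for 30,000 steps on two A6000s on λGPU

$20 for 12 hours

And you can build the data with the BLIP captioning Colab I tweeted earlier

Has the barrier to fine-tuning dropped?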

https://t.co/VXSkIBqgoU
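For a rough sense of scale, the figures quoted in that tweet (30,000 steps, 12 hours, $20, two A6000s) can be turned into per-hour and per-step numbers. This is only a back-of-the-envelope sketch: it assumes the $20 covers both GPUs for the full 12 hours, which the tweet does not state explicitly, and the variable names are made up for illustration.

```python
# Figures quoted in the tweet above.
total_cost_usd = 20.0
hours = 12.0
steps = 30_000

# Assumption (not stated in the tweet): the $20 is the total bill
# for both A6000s over the whole 12-hour run.
num_gpus = 2

cost_per_hour = total_cost_usd / hours              # whole machine, USD per hour
cost_per_gpu_hour = cost_per_hour / num_gpus        # USD per GPU-hour
steps_per_hour = steps / hours                      # training throughput
cost_per_1k_steps = total_cost_usd / (steps / 1000)  # USD per 1,000 steps

print(f"cost per hour:      ${cost_per_hour:.2f}")
print(f"cost per GPU-hour:  ${cost_per_gpu_hour:.2f}")
print(f"steps per hour:     {steps_per_hour:.0f}")
print(f"cost per 1k steps:  ${cost_per_1k_steps:.2f}")
```

If the $20 was actually per GPU, the per-GPU-hour figure doubles; the throughput number is unaffected.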

I used Naruto anime character pictures from https://t.co/tajQIbWLPf and captioned them using BLIP. Some captions are quite inaccurate but most of them are good enough.

The image + caption dataset is available here: https://t.co/aR6HHoL6Q0

Making the hands in my dataset better every day

#stablediffusion #NovelAIDiffusion #NovelAI #AIart

Some more Hypernetwork + Aesthetic Gradient test before bed.

1. Raw SD

2. Hypernetwork

3. Hypernetwork + Aesthetic Gradient (Same dataset)

I like the hypernetwork both with and without AG. A matter of taste, I guess? GN Everyone ✨

#AI #AIart #AIartcommunity #Stablediffusion

A snapshot of my talk @NFTuesdayLA.

Q: What kind of image datasets do you use?

A: I work with my own—digitally native, built from scratch with various AI models...I sculpt with a process that’s akin to chiseling a granite block into color, texture, and lighting palettes.

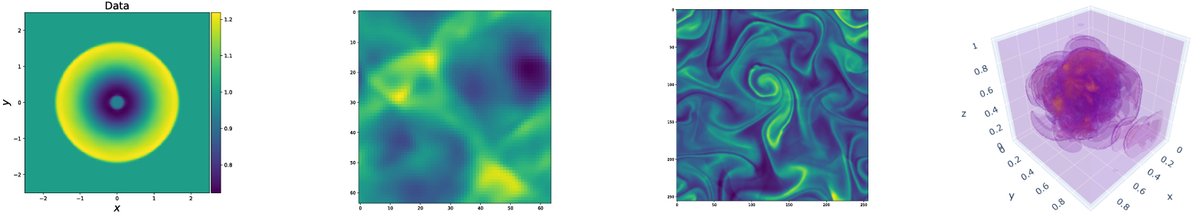

Using ML to solve PDEs is a hot topic. With PDEBench we propose a comprehensive and extensible benchmark that includes multiple datasets, (pre-trained) baseline models such as the FNO and U-Net, as well as JAX-based numerical solvers for realistic PDEs. https://t.co/NO5eyCAUAu

@Tez_Town @Tery @dns @myknash @strtsomethingio @DaedalusofCrete @_xs @objktcom @TeiaCommunity @WhaleTankNFT "Kind Giants", created for the #TezWild event by @RockyVulgar from New York, USA who just started minting a few months ago after being a collector for over a year. He was inspired by friend @EDMrSprinkles.

Created using a neural network built & trained w/his own datasets.

@zawalol Datasets containing thousands of images taken from artists without their consent to then be used for a meat grinder is not learning, it's theft.

@Suhail I spend about 20 hours on my art

You're entitled to your own expression obviously, but I don't qualify ai art as art. When people wager that 'abstract isn't art, anyone can do that' I disagree, but open-source ai that uses OTHER people's art as part of its dataset isn't art...

Loving the #starxterminator plugin that finally plays nicely with @affinitybyserif and Mac - same dataset but noticeable difference imho... @StargazerRob @sjb_astro @xRMMike @clarkjames70 @PeterLewis55 @DavidBflower @Dave_StarGeezer

6 years ago

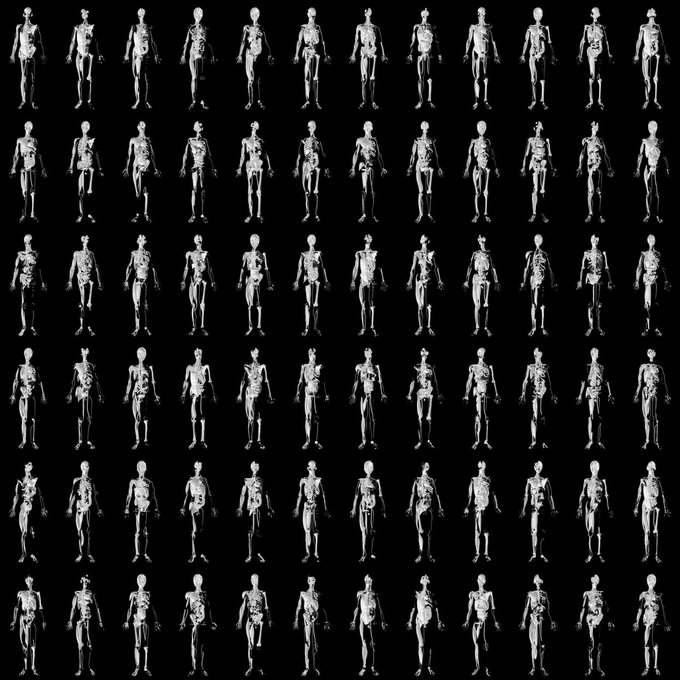

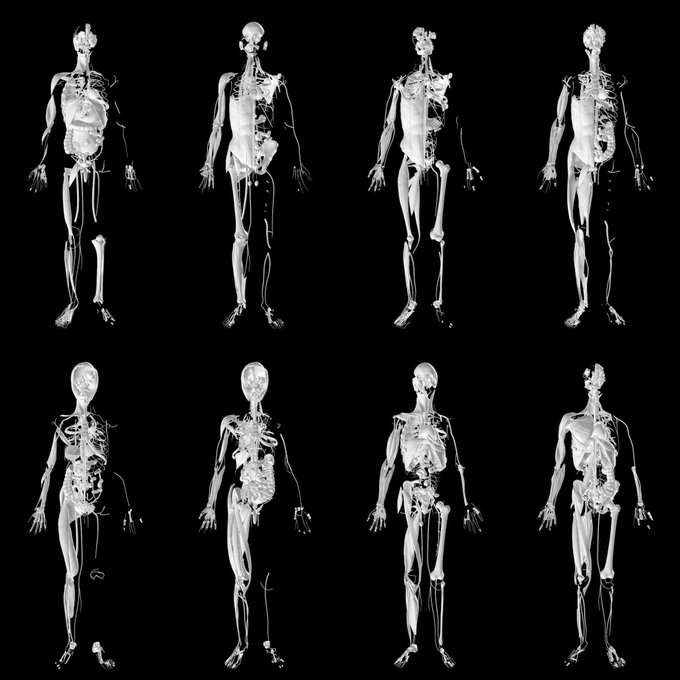

One fifth of a man

Quick Processing study of this dataset: https://t.co/gWdKGBIkOP

"BodyParts3D, © The Database Center for Life Science licensed under CC Attribution-Share Alike 2.1 Japan"

I wonder how setting up this ai worked because it clearly knew what "motorcycle batman" meant even though those words should mean almost nothing to an entirely pokemon related dataset

I fine tuned the original stable diffusion on a Pokemon dataset, captioned with BLIP. The captions aren't amazing (see this example), but they're ok. You can get my dataset here: https://t.co/enufYgHG41

@0xabad1dea i wonder why there's such a big disconnect between the results from searching the LAION dataset with CLIP, and the results from image generators trained on it

MidJourney dataset is filled with so many beautiful faces!

#MidjourneyAI #midjourney #AiArtwork #aiartcommunity #AI #AIart