Twitter illustration search results for "canny". Page 24 of 5,794 results.

@Here4FunSun @TigheSammy The movie version reminds me of Mr. Electric from Sharkboy and Lavagirl mixed with the uncanny valley of an Asylum knockoff movie.

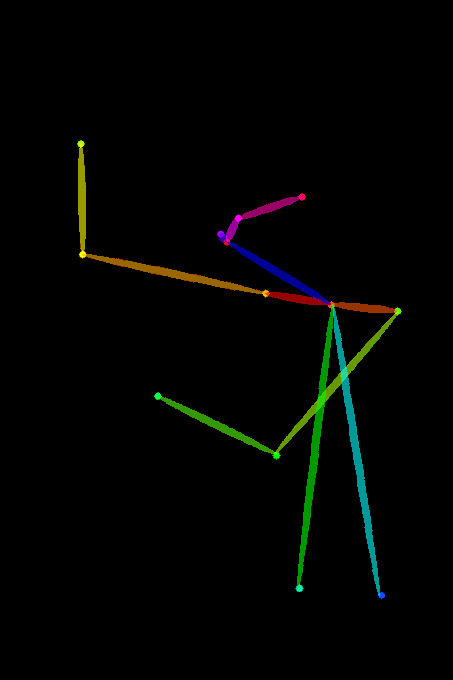

@kurisu_Amatist U can use hand detection model in preprocessor with openpose_hand

However, the training data for Hand Pose Estimation may be insufficient. It will improve

https://t.co/jcfTNeySP4

also try drawing hands with other ControlNet models such as Canny, Scribbles, Normal Map & Depth😊

After some fiddling, finally managed to get the ControlNet module to do what I wanted for the 1st time on this test image. Seems the key is to use the Canny preprocessor along with the OpenPose model. The wireframe was generated from https://t.co/IpcHIehKVu #StableDiffusion

“We shall all surely die.”

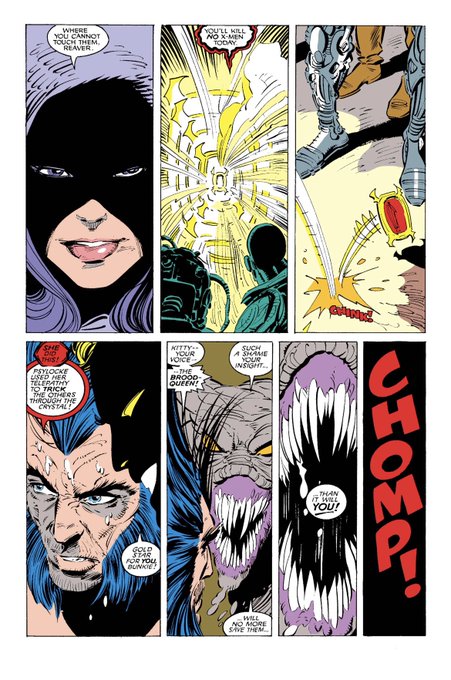

Uncanny X-Men #251. After seeing a vision of the X-Men killed by the Reavers Betsy telepathically nudges the team to use the Siege Perilous to escape. The Hand would later find an amnesiac Betsy and brainwash her into one of their deadliest assassins.

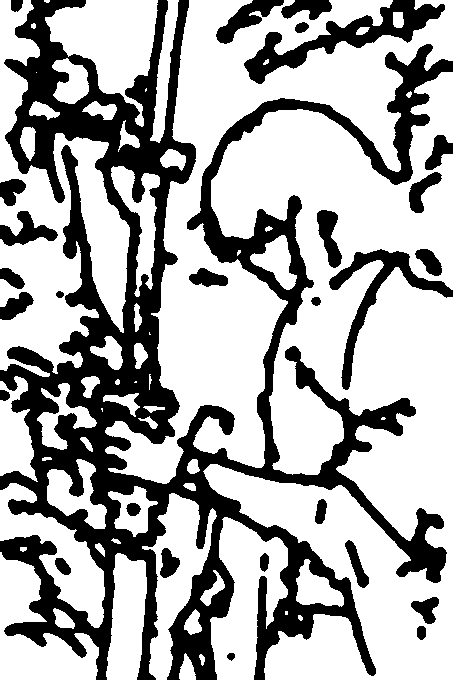

I took a dragon doodle I drew in 3 minutes

and fed it to Controlnet/Canny, the new Stable-diffusion plugin…

and this is what came out! Amazing!!

I could submit this as a design proposal as-is…🚀

GM ☀️

Happy Friday Degens 🍻

These are a few of my favorite projects rn 💰

What’s yours? 🤔

Always itching to snipe a new piece over the weekend, so drop em below 👇 🎯

#AcidVerse #TheUncanny #DrunkRobots #ThePlague

Donny is on the scene, staying strapped and ready for floor 🧹Friday, at @TheUncannyClub.. #UncannyNFT #uncannyclub #StayUncanny #nft

Horrors from the ends of the earth. The colder side of Christmas nights. Uncanny rhythms of the acoustic weird, and the ancient ecological power of the pagan goat god. 👻

As our #TalesoftheWeird series continues to grow, look back on the four titles we published last Autumn.

“No matter where you go…we’ll find you.”

Uncanny X-Men #328: a moment of sacrifice. Betsy jumps in to save Boom Boom from a manipulative Sabretooth. “The glow,” a method to dispel his homicidal urges is no more and Betsy would nearly be killed as a result.

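ControlNet experiment 2.

Borrowed my partner's rough sketch and auto-colored it.

The facial expression has changed, but if it can color this well with prompts at my current skill level, this could turn into something remarkable once the prompts are refined.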

Anything3.0+canny

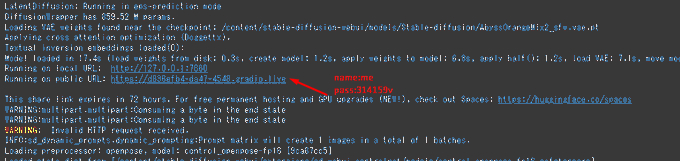

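@Votatatata @otahiiiiiiiiii I'm not from a technical background, so it's throwing all sorts of errors, and after hitting generate I can't tell whether it's working unless I check the Colab tab, but it does export, so good enough.

It's rough, but depth, pose, and canny all work!

I uploaded it to mega, so check the ALT text. Look up how to set it up yourself. I want to save the mobile users…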

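I'll write this up in an article later, but when you want to raise the quality exactly as-is, without any rerolling at all, use depth or canny and everything really stays in the same position while only the level of detail goes up!!! You can send it straight to img2img and upscale it to the maximum width with no problem at all!! (coughs up blood and dies)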

uncanny was always frowned upon, but I am kinda getting into it https://t.co/BP8hEbrYOC

GM GM ☀️

Found the @TheUncannyClub for me this morning

Had to scoop him.

Good vibes only 🪕 ❤️ 💨

What’s your favorite member of #TheUncannyClub?

#ControlNet

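The line art gets altered slightly,

but I wonder if the degree depends on how the canny model and the generation model are blended?

Image 1: original line art

Image 2: UUY fine-tuned model

Image 3: UUY fine-tuned model with a different sampler, etc.

Image 4: anythingv3 model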

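I promptly set up ControlNet, which came out the other day and has been getting a lot of attention,

and tried it out for coloring.

On the Bocchi-chan I drew recently, I applied anythingv3, the canny model, and a HyperNetwork additionally trained on my own art.

Image 2: anything only

Image 3: UUY HyperNetwork applied

Result: it colors things roughly the right way, but it does not follow the main lines exactly.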

this is uncanny to me. there is something off somehow. but he is mounted and the background is kinda cute. is this in silesse bc of the snow??? idk. mage knight azelle, +4 (def promotion gain). 8/10. He needs to look more scrunkly.