"an illustration of a bunny with a mustache playing a guitar"

Russian on left, OpenAI on right.

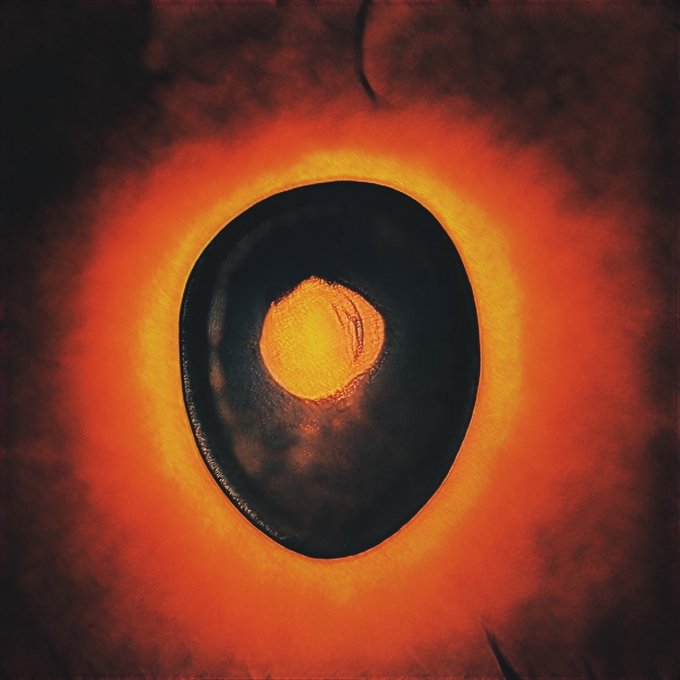

CLIP Portraiture - these are generated using a text prompt and an initialization image.

Wow. CLIP Portraiture is so much fun. 4 hours have flown by exploring different prompts and init images.

Huge thanks to @RiversHaveWings @danielrussruss for sharing their guided diffusion notebooks. And @pbaylies for inspiration, as always. 🙏

Few more samples in thread ⬇️

@ak92501 Actually - update that - 768 works too! Not sure if it was switching to a GPU instance or restarting. Great detail. (1024 still too high on Colab.)

@ak92501 I tried setting target_image_size = 512 ...works! 👍

target_image_size = 768 ...crashes. 🚫 Runs out of RAM - at least on Colab Pro.

Results ⬇️

"A cloud made of [dogs / eyeballs / love / water]."

Fascinating to use #CLIP from @OpenAI to steer #BigGAN. The text on each image is the prompt I used to guide BigGAN to that image.

Huge thanks to @advadnoun for code/Colab and @AmysImaginarium for inspiration! 🙏

Thread ⬇️

Cyrus was able to create intentional edits based on a library of thousands of generated star and cell images and their associated NumPy arrays. Thanks, as always, to @memotv for the inspiration and his 2018 @NeurIPSConf paper, Deep Meditations. 🙏
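For anyone curious how an edit can be built from a library of saved arrays, here is a minimal sketch. It assumes each NumPy array is a latent vector that the generator turns into one image, and that an edit is a path through a few hand-picked vectors. The 512-dimensional size, the random `library`, and the helper names `slerp` and `edit_sequence` are hypothetical stand-ins, not the code used for this piece.

```python
import numpy as np

def slerp(z0, z1, t):
    """Spherical interpolation between two latent vectors, t in [0, 1]."""
    z0n = z0 / np.linalg.norm(z0)
    z1n = z1 / np.linalg.norm(z1)
    # Angle between the two directions, clipped for numerical safety.
    omega = np.arccos(np.clip(np.dot(z0n, z1n), -1.0, 1.0))
    if np.isclose(omega, 0.0):
        # Nearly parallel vectors: fall back to linear interpolation.
        return (1.0 - t) * z0 + t * z1
    so = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / so) * z0 + (np.sin(t * omega) / so) * z1

def edit_sequence(keyframes, steps_per_segment):
    """Build a path of latents that passes through each keyframe in order."""
    frames = []
    for z0, z1 in zip(keyframes[:-1], keyframes[1:]):
        for i in range(steps_per_segment):
            frames.append(slerp(z0, z1, i / steps_per_segment))
    frames.append(keyframes[-1])  # land exactly on the final keyframe
    return np.stack(frames)

# Hypothetical library: 1000 saved latents of dimension 512 (assumed sizes).
rng = np.random.default_rng(0)
library = rng.standard_normal((1000, 512))

# Keyframes picked by eye from the generated stills (indices are arbitrary).
picked = [library[3], library[42], library[7]]
path = edit_sequence(picked, steps_per_segment=30)
print(path.shape)
```

Each row of `path` would then be fed to the generator to render one frame. Spherical rather than linear interpolation is a common choice for Gaussian latents, since it keeps the in-between vectors at a similar norm to the keyframes.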

Example stills edit sequence:

Inspired by the words and personal meaning of Rumi's poem, The Guest House, we developed a model based on a dataset of micro and macro imagery of cells, galaxies and other forms representing both the building blocks of humanity and its own place in the universe. Sample stills:

Today's reverse toonification experiments with art from @Pixar for Incredibles 2, Up, & Coco.

This framework from @EladRichardson and @yuvalalaluf quickly finds a "real" human face in the #StyleGAN FFHQ latent space. Adding some style randomness too.

More Pixar in thread! ⬇️